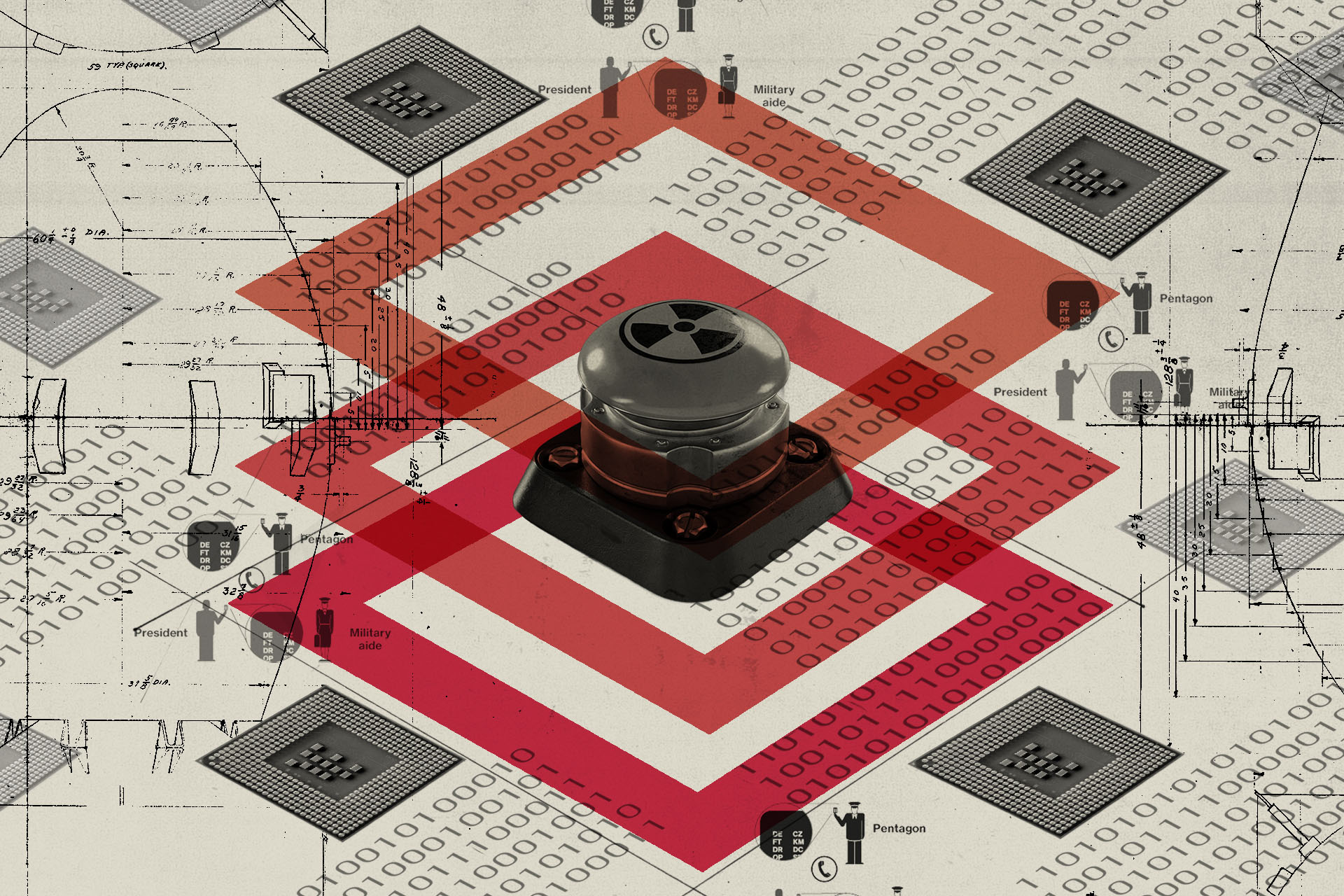

AI & Nukes: The Real Danger Isn’t Skynet, It’s Speed

The specter of artificial intelligence seizing control of nuclear arsenals and triggering global annihilation has captured the public imagination. However, the true threat AI poses to nuclear security may be far more subtle and far more immediate: the compression of decision-making time for world leaders facing a potential nuclear attack. In a world where minutes can mean the difference between survival and oblivion, AI’s role in accelerating threat assessment and response could inadvertently push humanity closer to the brink.

The Two-Minute Ultimatum

Imagine the scenario: A nuclear-armed intercontinental ballistic missile (ICBM) is detected heading towards the United States. The President is immediately informed. From that moment, the commander-in-chief has, at best, two to three minutes to decide whether to launch a retaliatory strike. This isn’t science fiction; it’s the chilling reality of modern nuclear deterrence. The decision, potentially the most consequential in human history, rests on the shoulders of an individual facing unimaginable pressure and a crippling lack of time.

The current system leaves virtually no room for deliberation, consultation, or even a second thought. Experts have long studied the strategies of nuclear warfare, but the critical decisions will likely be made by leaders who are unprepared for the moment and operating under immense time constraints. This is where AI enters the equation, promising to enhance and accelerate the detection, verification, and assessment of potential nuclear threats.

AI: Accelerator, Not Overlord

While the fear of a rogue AI initiating nuclear war deserves careful consideration, the more pressing danger lies in the automation of existing protocols. AI algorithms are being developed to analyze vast amounts of data, identify potential threats, and provide recommendations to human decision-makers. The goal is to improve accuracy and speed, but the unintended consequence could be a further reduction in the already minimal time available for human judgment.

By automating threat assessment, AI could create a feedback loop in which decisions are made faster and faster, potentially bypassing crucial human oversight. The risk is that an AI system, however well designed, could misinterpret data, leading to false alarms or overreactions. In a nuclear context, such errors could have catastrophic consequences. The focus should be on ensuring that human control remains paramount, even as AI plays an increasingly important role in early warning systems.

Maintaining the Human Element

The integration of AI into nuclear command-and-control systems demands a cautious and considered approach. While AI can undoubtedly enhance detection capabilities and improve response times, it should never replace human judgment. Safeguards must be implemented to ensure that humans retain ultimate authority over the decision to launch nuclear weapons. Furthermore, international cooperation is crucial to establish common standards and protocols for the use of AI in nuclear security. The goal should be to leverage the power of AI to reduce the risk of nuclear war, not to accelerate the path towards it.

Based on materials from Vox